You can use the SIDB2 tool to compact the data files in the deduplication database (DDB) and to prevent the DDB disk drives from running out of space. If the DDB has multiple partitions, run the compaction on all of them, either one after the other or simultaneously. You do not need to run this tool periodically, because the system reclaims space automatically during data aging operations. Run the DDB compaction if the support team recommends it.

There are two types of compaction possible on DDBs.

- Compacting the primary and other core files.

  The compact option compresses the data files available in the DDB location, such as property.dat, primary.dat, zeroref.dat, and archive.dat, and rebuilds their indexes. Optionally, you can use the compactfile option to compact a specific data file available in the DDB location (property.dat, primary.dat, zeroref.dat, or archive.dat).

  Note

  To run the compacting process, the free space must be double the size of the largest data file. If the free space is not sufficient, the compacting process might fail and the DDB might get corrupted.

- Compacting the secondary files.

  This operation is required as a preparatory step for converting older DDBs into DDBs that support the Garbage Collection feature. Compacting secondary files is effective for DDBs that were created prior to SP14. DDBs created after SP14 are automatically configured to support the Garbage Collection feature.

  The compactfile secondary option splits the secondary files and reclaims the space held by information related to aged backup jobs.

  Note

  To run the compacting process, ensure that the free space is at least the size of the largest secondary file.
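The two free-space rules above (double the largest data file for core files, the size of the largest secondary file for secondary files) can be checked before a run. The following Python sketch is illustrative only: the helper names are hypothetical, and it assumes the data files sit directly inside the partition folder that you pass in, which may not match your DDB layout.

```python
import os
import shutil

# File names come from the text above; the flat folder layout is an assumption.
CORE_FILES = ("property.dat", "primary.dat", "zeroref.dat", "archive.dat")


def required_free_bytes(file_sizes, multiplier):
    """Return multiplier times the size of the largest file (0 if there are none)."""
    return multiplier * max(file_sizes, default=0)


def has_enough_space(free_bytes, file_sizes, multiplier):
    """True when the free space meets the requirement for the given rule."""
    return free_bytes >= required_free_bytes(file_sizes, multiplier)


def check_split_folder(split_dir, names=CORE_FILES, multiplier=2):
    """Check a partition folder on disk (hypothetical helper, assumes a flat layout)."""
    sizes = [
        os.path.getsize(os.path.join(split_dir, name))
        for name in names
        if os.path.isfile(os.path.join(split_dir, name))
    ]
    free = shutil.disk_usage(split_dir).free
    return has_enough_space(free, sizes, multiplier)
```

For the core files, keep the default `multiplier=2`. For the secondary files, pass the secondary file names and `multiplier=1`.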

Tips:

- Space reclamation does not happen if the associated storage policies have Infinite retention set.

- We recommend that you run a new DDB backup after running the compaction, because the previous DDB backups are marked as invalid.

- If a firewall or network configuration is in place when you run the DDB compaction process, you must start the Network Daemon service manually. Otherwise, the compaction process might fail.

- The time taken to compact a 500 GB deduplication database is approximately 4 hours.
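For rough planning, the 500 GB in approximately 4 hours figure can be scaled to other DDB sizes. The Python sketch below assumes that the duration grows linearly with DDB size. That is an assumption made here for illustration, not a documented behavior, and actual times depend on hardware and load. The function name is hypothetical.

```python
REFERENCE_SIZE_GB = 500  # from the tip above
REFERENCE_HOURS = 4      # from the tip above


def estimate_compaction_hours(ddb_size_gb):
    """Linear extrapolation from the reference figure (assumed, not documented)."""
    if ddb_size_gb < 0:
        raise ValueError("size must be non-negative")
    return ddb_size_gb * REFERENCE_HOURS / REFERENCE_SIZE_GB
```

Under this assumption, a 1000 GB DDB would be planned at about 8 hours of DDB unavailability.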

Supported DDB versions:

- v10 DDBs on v11 MediaAgent

- v11 DDBs on v11 MediaAgent

Before You Begin

Caution

During the compaction process, the DDB is unavailable, and any job that requires interaction with the DDB goes into a pending state.

- Make sure that the following jobs are not running against the DDB:

  - Backup jobs

  - DDB backup jobs

  - DDB move

  - DDB reconstruction

  - Auxiliary copy jobs

- On Windows, ensure that QuickEdit Mode is disabled in the Command Prompt Properties dialog box.

Procedure

- Log on to the MediaAgent computer that is hosting the DDB.

- Stop all the jobs that are running for the DDB.

- Ensure that there is no SIDB2 process running on the MediaAgent.

  Important

  If the SIDB2 process is running, do not kill the process. You must wait for the process to stop on its own before you proceed to the next step.

  - For Windows, verify the status on the Processes tab of the Windows Task Manager dialog box.

  - For Linux, run the commvault list command.

- On the command line, go to the software_installation_directory/Base folder, and run one of the following commands.

  Windows

  compact option

  sidb2 -compact -in <instance_number> -cn <client_name> -i <DDB_ID> -split <split_number>

  compactfile option

  sidb2 -compactfile <Primary/Zeroref/property/archive> -in <instance_number> -cn <client_name> -i <DDB_ID> -split <split_number>

  compactfile secondary option

  sidb2 -compactfile secondary -i <DDB_ID> -split <split_number>

  Linux

  compact option

  ./sidb2 -compact -in <instance_number> -cn <client_name> -i <DDB_ID> -split <split_number>

  compactfile option

  ./sidb2 -compactfile <Primary/Zeroref/property/archive> -in <instance_number> -cn <client_name> -i <DDB_ID> -split <split_number>

  compactfile secondary option

  ./sidb2 -compactfile secondary -i <DDB_ID> -split <split_number>

Where

|

Options |

Descriptions |

|---|---|

|

-compact |

The keyword to reduce the size of the DDB. |

|

-compactfile |

The keyword to reduce the size of a specific data file of the DDB, such as such as property.dat, primary.dat, zeroref.dat, and archive.dat. |

|

-compactfile secondary |

The keyword to create a single archive file per secondary file. |

|

-in |

The instance of the software that uses the tool. |

|

-cn |

The client name of the MediaAgent that hosts the DDB. |

|

-i |

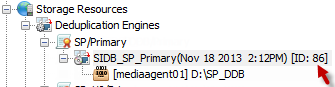

The ID of the DDB. You can view the ID can be viewed from the Deduplication Engines node in the CommCell Browser.

|

|

-split |

The partition number of the DDB. You can view the split number by accessing the path of the DDB. For example, in the following location, the split number is 01. E:\<DDB Folder>\CV_SIDB\2\86\Split 01 |
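The options in the table map onto a simple argument list, and the SIDB2 process check from the procedure can be scripted in the same place. The Python sketch below is a minimal illustration under stated assumptions. The function names are hypothetical. The process check assumes that the process name contains "sidb2" and uses the standard `tasklist` and `ps` commands rather than any Commvault tool. The builder only reproduces the three command forms shown in this procedure.

```python
import subprocess
import sys

CORE_FILES = {"primary", "zeroref", "property", "archive"}


def sidb2_is_running(process_listing):
    """Return True if any line of a process listing mentions sidb2 (case-insensitive)."""
    return any("sidb2" in line.lower() for line in process_listing.splitlines())


def list_processes():
    """Fetch a process listing with the platform's standard tool."""
    if sys.platform.startswith("win"):
        cmd = ["tasklist"]
    else:
        cmd = ["ps", "-e", "-o", "comm="]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def build_sidb2_command(mode, ddb_id, split, instance=None, client=None, data_file=None):
    """Assemble the argument list for one of the three command forms shown above."""
    exe = "sidb2" if sys.platform.startswith("win") else "./sidb2"
    if mode == "compact":
        args = [exe, "-compact"]
    elif mode == "compactfile":
        if data_file is None or data_file.lower() not in CORE_FILES:
            raise ValueError("data_file must be one of: " + ", ".join(sorted(CORE_FILES)))
        args = [exe, "-compactfile", data_file]
    elif mode == "secondary":
        args = [exe, "-compactfile", "secondary"]
    else:
        raise ValueError("unknown mode: " + mode)
    # -in and -cn appear in the compact and compactfile forms; add them only when given.
    if instance is not None:
        args += ["-in", instance]
    if client is not None:
        args += ["-cn", client]
    args += ["-i", str(ddb_id), "-split", split]
    return args
```

A wrapper script could call `sidb2_is_running(list_processes())` first and proceed only when it returns `False`. If it returns `True`, wait for the process to stop on its own, as the procedure requires. The resulting argument list is intended to be run from the Base folder.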

Examples

To compact split 01 of the DDB with ID 86, located on DDBMediaAgent01, run the following command:

sidb2 -compact -in Instance001 -cn DDBMediaAgent01 -i 86 -split 01

To compact the primary file of the DDB with ID 1288, run the following command:

sidb2 -compactfile primary -i 1288 -split 00

To compact the secondary files of the DDB with ID 2370 by creating a single archive file per secondary file, run the following command:

sidb2 -compactfile secondary -i 2370 -split 00

Output

The following is a sample of the output for the compact option.

sidb2 -compact -in Instance001 -cn DDBMediaAgent01 -i 86 -split 01

Getting information for engine [86]...

Compacting property file. Recs [91]

Compressing data file. 98% (90/91)

Rebuilding index file. 98% (90/91)

Writing index file... 98% (90/91)

Compacting primary file. Recs [2854865]

Compressing data file. 100% (2854865/2854865)

Rebuilding index file. 100% (2854865/2854865)

Writing index file... 100% (2854865/2854865)

Rebuilding additional index #1. 100% (2854865/2854865)

Writing index file... 100% (2854865/2854865)

Compacting zeroref file. Recs [45534]

Compressing data file.. 99% (45530/45534)

Rebuilding index file. 99% (45530/45534)

Writing index file... 99% (45530/45534)

Rebuilding additional index #1. 99% (45530/45534)

Writing index file... 99% (45530/45534)

Compacting archive file. Recs [89]

Compressing data file. 95% (85/89)

Rebuilding index file. 95% (85/89)

Writing index file... 95% (85/89)

Compacting completed successfully

The following is a sample of the output for the compactfile option.

sidb2 -compactfile primary -i 1288 -split 00

Getting information for engine [1288]...

Compacting primary file. Recs [1240]

Compressing data file. 100% (1240/1240)

Rebuilding index file. 100% (1240/1240)

Writing index file... 100% (1240/1240)

Rebuilding additional index #1. 100% (1240/1240)

Writing index file... 100% (1240/1240)

Compacting completed successfully

The following is a sample of the output for the compactfile secondary option.

sidb2 -compactfile secondary -i 2370

Getting information for engine [2370]...

Compacting secondary [Secondary_30440.idx]

Compacting secondary [Secondary_30441.idx]

Compacting secondary [Secondary_30442.idx]

Compacting secondary [Secondary_30443.idx]

Compacting secondary [Secondary_30444.idx]

Compacting secondary [Secondary_30445.idx]

Compacting secondary [Secondary_30446.idx]

Compacting secondary [Secondary_30447.idx]

Compacting secondary [Secondary_30448.idx]

Compacting secondary [Secondary_30449.idx]

Compacting secondary [Secondary_30450.idx]

Compacting secondary [Secondary_30451.idx]

Compacting secondary [Secondary_30452.idx]

Compacting completed successfully
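If you capture the tool output to a file or a variable, the sample outputs above suggest a few simple checks. The Python sketch below is based only on the line formats shown in these samples. The function names are hypothetical, and real output may contain other lines or formats that this sketch does not handle.

```python
import re

SUCCESS_LINE = "Compacting completed successfully"
PROGRESS_RE = re.compile(r"(\d+)% \((\d+)/(\d+)\)")
SECONDARY_RE = re.compile(r"Compacting secondary \[([^\]]+)\]")


def compaction_succeeded(output):
    """True if the output contains the success line shown in the samples."""
    return SUCCESS_LINE in output


def secondary_files(output):
    """Names of the secondary files reported in the output, in order of appearance."""
    return SECONDARY_RE.findall(output)


def progress_entries(output):
    """(percent, done, total) tuples for every progress marker in the output."""
    return [tuple(int(g) for g in m.groups()) for m in PROGRESS_RE.finditer(output)]
```

Treat a missing success line as a signal to review the full output manually, not as proof of failure, because this check relies on the sample wording alone.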